'Models and Methods and Tools, Oh My!' by Hervé L'Hours

We aren’t short of challenges in the 4C project, but trying to help the community with curation cost modelling carves out a space of its very own. We benefit from an excellent earlier analysis of current cost calculation models (http://www.4cproject.eu/community-resources/outputs-and-deliverables/d3-1-evaluation-of-cost-models-and-needs-gaps-analysis), but the task now is to provide a framework to support future research and development in the curation cost space.

How do we best develop the promised cost concept model and gateway specification into something that supports the harmonisation of different cost modelling approaches, moving from models that often focus on cost data from a single institution to something more widely applicable?

Our Advisory Committee have advocated caution and focus, telling us that if we can provide a common vocabulary and promote a common understanding of basic cost concepts this will be a huge advance in the curation costing landscape. But how to communicate this clearly in such a complex space?

So what do we mean by Models and Methods and Tools?

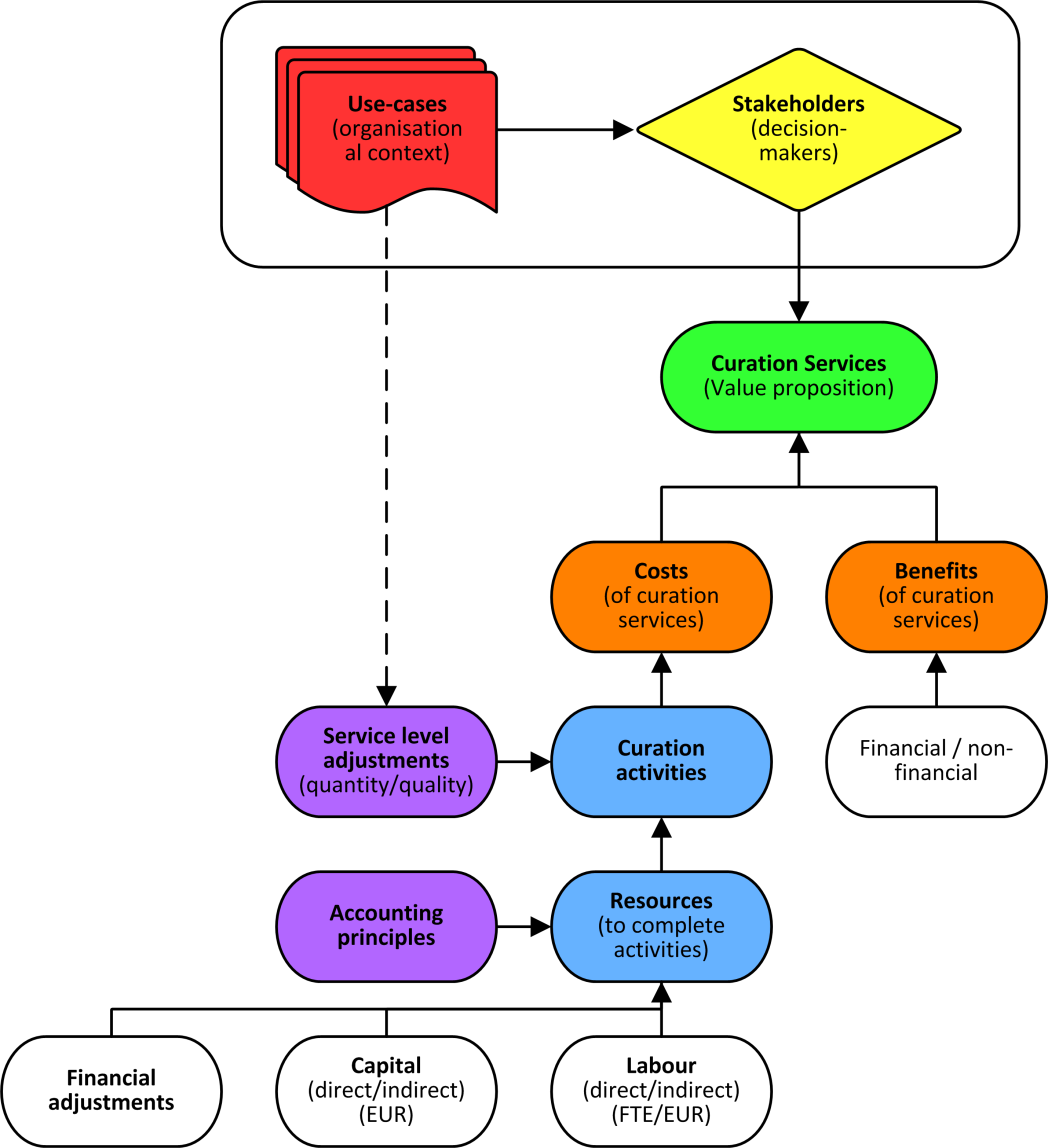

We’ve started with a simple mind-map of the core cost concepts that directly or indirectly influence how we think about costs. These core concepts are the ones that remain stable across the organisational contexts of all our stakeholders, and we’re taking the time to define them clearly. Our current thinking sets them out as: the curation activities carried out by curation services; the resources necessary to deliver those activities; and the stakeholder context necessary to understand and refine them. We’re then moving on to describe the primary interactions between these core concepts.
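To make the three core concepts more concrete, here is a minimal Python sketch of how they might be represented as data structures. It is purely illustrative: the class names, the fields, and the simple rule that an activity's cost is the sum of the costs of the resources allocated to it are assumptions made for this example, not the 4C cost concept model itself.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """A resource consumed by a curation activity (hypothetical structure)."""
    name: str
    unit_cost: float  # cost per unit, assumed to be in a single currency
    units: float      # quantity of the resource allocated to the activity

    def cost(self) -> float:
        return self.unit_cost * self.units


@dataclass
class CurationActivity:
    """A curation activity, costed here as the sum of its resources (assumption)."""
    name: str
    resources: list[Resource] = field(default_factory=list)

    def cost(self) -> float:
        return sum(r.cost() for r in self.resources)


@dataclass
class StakeholderContext:
    """Who the costs matter to; needed to interpret and refine the figures."""
    organisation: str
    role: str  # e.g. "repository" or "funder" (illustrative values)


@dataclass
class CurationService:
    """A curation service delivering activities within a stakeholder context."""
    name: str
    context: StakeholderContext
    activities: list[CurationActivity] = field(default_factory=list)

    def total_cost(self) -> float:
        return sum(a.cost() for a in self.activities)


# Illustrative usage with made-up figures
ingest = CurationActivity("ingest", [Resource("staff time (hours)", 30.0, 10)])
storage = CurationActivity("bit-level storage", [Resource("disk (TB-year)", 100.0, 2)])
service = CurationService(
    "example archive service",
    StakeholderContext("Example Archive", "repository"),
    [ingest, storage],
)
print(service.total_cost())
```

Keeping the stakeholder context as a separate object, rather than as extra fields on each activity, mirrors the idea that the same activities and resources can be interpreted differently depending on who is asking.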

Interactions between core cost concepts

We know that one gap in the current generation of cost models is effective documentation, and we hope this common set of concepts, definitions and relationships can be used by future cost model developers, improving the delivery of standardised supporting materials for users of methods and tools.

Just as a map sacrifices detail to provide an efficient representation of the territory, a model provides a representation of some underlying universe, environment, organisation, product or system. It is the purpose of the map or model that helps us decide the right level of abstraction: which simplifications are possible while still meeting the intended goals.

Development of a full methodology for calculating curation costs must identify a set of use cases, define the scope of their stakeholders, clarify the necessary inputs and calculations, and then go beyond this core of concepts to identify a more detailed model capable of supporting their work. A wide range of cost data, calculations and comparisons is needed to support the curation community, for example to answer questions such as:

- Is the efficiency of my activities increasing year on year?

- What would I gain from automating or outsourcing some processes?

- What’s my overall cost per GB of data?

- How do I compare to organisations of similar size, mission or with similar collections to manage?
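The cost-per-GB and year-on-year questions reduce to simple arithmetic once comparable figures exist. The sketch below assumes one total curation cost and one data volume per year; the function names and all figures are hypothetical, chosen only to show the calculation.

```python
def cost_per_gb(total_cost: float, data_volume_gb: float) -> float:
    """Overall unit cost: total curation cost divided by data volume managed."""
    if data_volume_gb <= 0:
        raise ValueError("data volume must be positive")
    return total_cost / data_volume_gb


def year_on_year_change(previous: float, current: float) -> float:
    """Fractional change in unit cost; a negative value means the unit cost fell."""
    return (current - previous) / previous


# Hypothetical figures for illustration only: (total curation cost, GB managed)
years = {
    2012: (120_000.0, 40_000.0),
    2013: (126_000.0, 60_000.0),
}

unit_costs = {year: cost_per_gb(cost, gb) for year, (cost, gb) in years.items()}
change = year_on_year_change(unit_costs[2012], unit_costs[2013])

for year in sorted(unit_costs):
    print(f"{year}: {unit_costs[year]:.2f} per GB")
print(f"Year-on-year change: {change:+.1%}")
```

With these made-up figures the unit cost falls from 3.00 to 2.10 per GB, a 30% reduction. The comparison is only meaningful if both years' totals cover the same activities and resources, which is the kind of consistency a shared set of cost concepts is meant to support; the same caveat applies even more strongly when comparing against other organisations.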

This strand of 4C work hopes to provide a framework so that potential users of various cost methods can understand what’s right for them and so that cost model developers can ensure that the outputs from their models are (potentially) comparable across different environments.

Organisations seldom manage their financial accounts with curation costing in mind. Even if they do, breaking down internal activities and resources accurately enough to plug into a cost methodology can be time-consuming. So every cost method (and its associated model) is a compromise, an attempt to extract only the concepts, variables and contexts necessary to deliver the required answers. Highly detailed and granular approaches may yield more impressive results at first glance, but that granularity may come at the expense of accuracy. Any method, but especially one claiming to offer a highly flexible approach supporting a wide range of stakeholders and use cases, carries some risks. Those risks need to be identified and clearly communicated to users.

One approach to making methods more understandable, and data entry and output more efficient, is to offer a supporting tool. From paper guidance to formula-driven spreadsheets to fully fledged applications, the key is usability, which (alongside effective documentation) can increase adoption, comprehension and accuracy. Usability implies that tools must be developed and tested with real users, in real-world scenarios, with real and/or clearly representative data.

So a ‘cost method’ equates to the core concept model, plus extensions covering the use cases and the organisational/stakeholder context, along with the calculations and comparisons necessary to answer the questions identified, all presented in a usable, clearly documented tool.

Most of us are comfortable entering values into a spreadsheet, and spreadsheets are an affordable solution for most curation cost researchers, but this still represents a parallel process for the curation community. It might be an issue for the future (via the 4C Roadmap) rather than something the current project can tackle, but curation costing methods that extract data directly from accounting systems, or from the increasingly rich curation and archiving products [1], would bring all of our modelling efforts a step closer to the real world.

P.S. Of course the easiest way to generate meaningful answers about curation cost is from accurate and representative models, methods and tools. But the easiest way to develop those models, methods and tools is to have access to data about curation costs. If you’d like to help break this vicious circle then consider having a look at the Curation Cost Exchange (CCEx) and sharing some of your numbers with the community.

Hervé L’Hours, UK Data Archive - University of Essex

Hervé is the Metadata Manager for the UK Data Archive with responsibility for business process classification activities in line with the OAIS reference model, and is aligning the Archive’s work towards Data Documentation Initiative-Lifecycle (DDI-L) standardisation against the Metadata Encoding and Transmission Standard (METS) and the PREMIS preservation metadata model. UKDA is responsible for leading task 3.3 to develop a cost concept model and gateway requirement specification.